ECVP talk: Learning about Shape, Material, and Illumination by Predicting Videos

ECVP talk: Learning about Shape, Material, and Illumination by Predicting Videos

Abstract

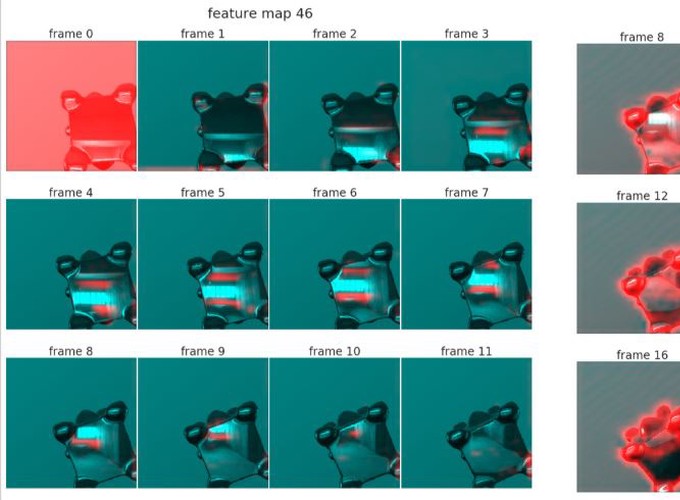

Unsupervised deep learning provides a framework for understanding how brains learn rich scene representations without ground-truth world information. We rendered 10,000 videos of irregularly-shaped objects moving with random rotational axis, speed, illumination, and reflectance. We trained a recurrent four-layer ‘PredNet’ to predict the next frame in each video. After training, object shape, material, position, and lighting angle could be predicted for new videos by taking linear combinations of unit activations. Representations are hierarchical, with scene properties better estimated from deep than shallow layers (e.g., reflectance can be predicted with R2=0.78 from layer 4, but only 0.43 from layer 1). Different properties emerge with different temporal dynamics: reflectance can be decoded at frame 1, whereas decoding of object shape and position improve over frames, showing that information is integrated over time to disambiguate scene properties. Visualising single ‘neurons’ revealed selectivity for distal features: a ‘shadow unit’ in layer 3 responds exclusively to image locations containing the object’s shadow, while a ‘salient feature’ unit appears to track high curvature points on the object. Comparing network predictions to human judgments of scene properties reveals they rely on similar image information. Our findings suggest unsupervised objectives lead to representations that are useful for many estimation tasks.