VSS talk: Unsupervised Neural Networks Learn Idiosyncrasies of Human Gloss Perception

VSS talk: Unsupervised Neural Networks Learn Idiosyncrasies of Human Gloss Perception

Abstract

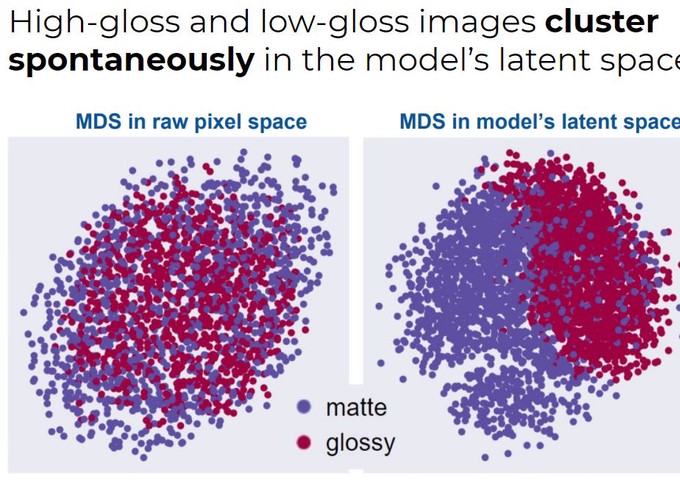

We suggest that characteristic errors in gloss perception may result from the way we learn vision more generally, through unsupervised objectives such as efficiently encoding and predicting proximal image data. We tested this idea using unsupervised deep learning. We rendered 10,000 images of bumpy surfaces of random albedos, bump heights, and illuminations, half with high-gloss and half with low-gloss reflectance. We trained an unsupervised six-layer convolutional PixelVAE network to generate new images based on this dataset. This network learns to predict pixel values given the previous ones, via a highly compressed 20-dimensional latent representation, and receives no information about gloss, shape or lighting during training. After training, multidimensional scaling revealed that the latent space substantially disentangles high and low gloss images. Indeed, a linear classifier could classify novel images with 97% accuracy. Samples generated by the network look like realistic surfaces, and their apparent glossiness can be modulated by moving systematically through the latent space. We generated 30 such images, and asked 11 observers to rate glossiness. Ratings correlated moderately well with gloss predictions from the model (r = 0.64). To humans, perceived gloss can be modulated by lighting and surface shape. When the model was shown sequences varying only in bump height, it frequently exhibited non-constant gloss values, most commonly, like humans, seeing flatter surfaces as less glossy. We tested whether these failures of constancy in the model matched human perception for specific stimulus sequences. Nine observers viewed triads of images from five sequences in a Maximum Likelihood Difference Scaling experiment. Gloss values within the unsupervised model better predicted human perceptual gloss scales than did pixelwise differences between images (p= 0.042). Unsupervised machine learning may hold the key to fully explicit, image-computable models of how we learn meaningful perceptual dimensions from natural data.